Evaluating Combinatorial Generalization in Variational Autoencoders

Bozkurt, Esmaeili, et al. Northeastern University

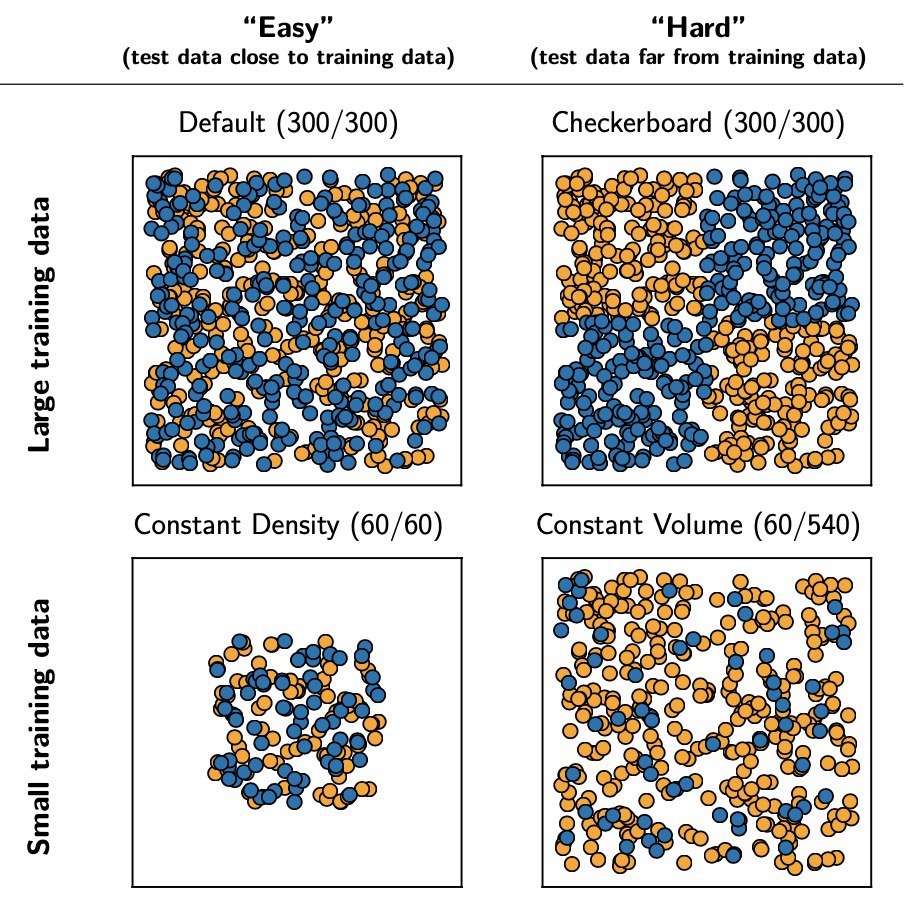

arxiv.org/abs/1911.04594

The paper studies how well shallow and deep VAEs generalize under different dataset splits. The authors vary two factors: the difficulty of the split (an “easy” versus a “hard” generalization problem) and the size of the training set (“small dataset” vs. “big dataset”).

VAEs are trained to autoencode MNIST images.

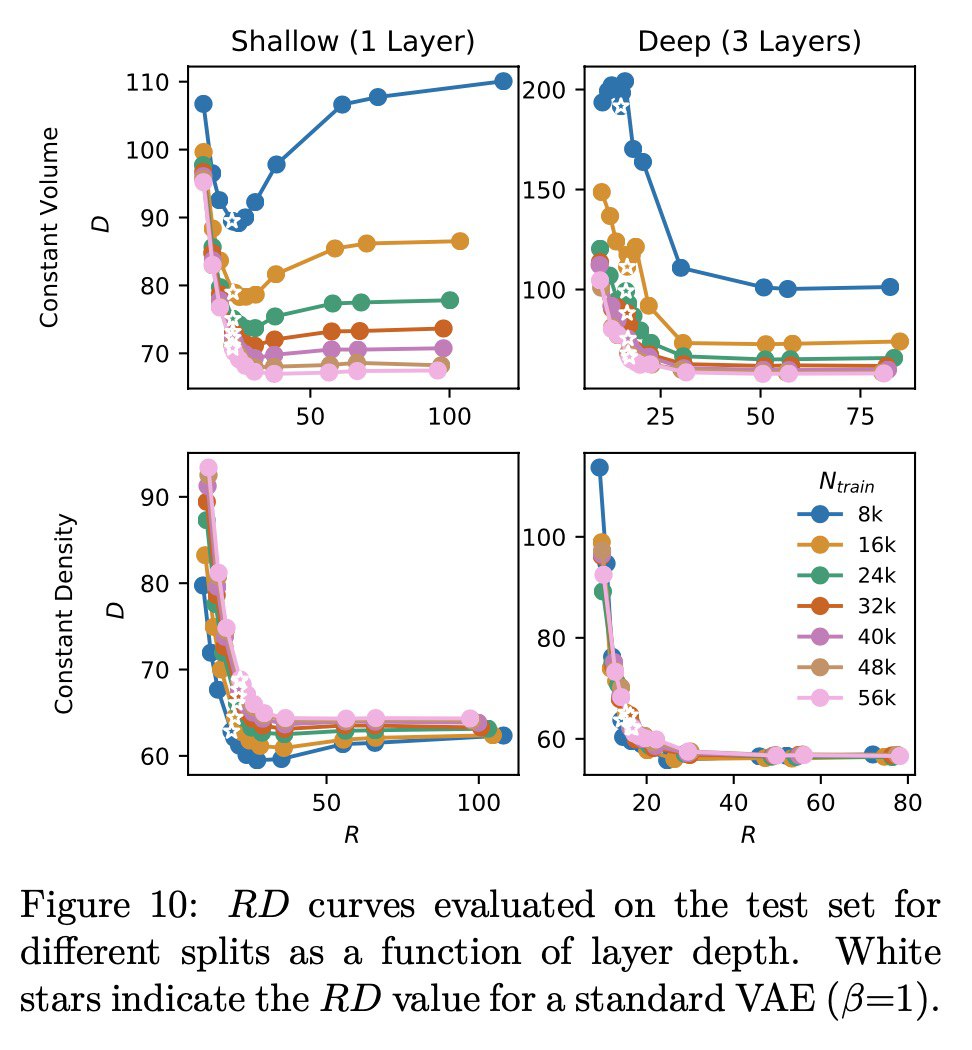

First, they study how well a VAE memorizes the training set. Deep models memorize it more than shallow ones and reuse memorized examples when reconstructing unseen data. In particular, they find that a deep model's reconstructions of unseen data (e.g., a class in MNIST that was absent during training) are closer to training examples than a shallow model's are.
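One way to make “closer to training examples” concrete is to measure, for each reconstruction, the distance to its nearest training image. The sketch below is only an illustration under assumptions: the Euclidean pixel distance, the function name `nearest_train_distance`, and the random arrays standing in for images are all hypothetical choices, not the paper's actual metric or code.

```python
import numpy as np

def nearest_train_distance(recons, train):
    """Return, for each reconstruction, the L2 distance to its nearest
    training image and that image's index (hypothetical helper)."""
    r = recons.reshape(len(recons), -1).astype(np.float64)
    t = train.reshape(len(train), -1).astype(np.float64)
    # Pairwise squared distances: ||r||^2 - 2 r.t + ||t||^2
    d2 = (r ** 2).sum(axis=1)[:, None] - 2.0 * (r @ t.T) + (t ** 2).sum(axis=1)[None, :]
    d2 = np.maximum(d2, 0.0)  # guard against tiny negative values from rounding
    idx = d2.argmin(axis=1)
    return np.sqrt(d2[np.arange(len(r)), idx]), idx

# Stand-in data (assumption): random 28x28 arrays instead of real MNIST
# images and real VAE reconstructions.
rng = np.random.default_rng(0)
train_images = rng.random((100, 28, 28))
reconstructions = rng.random((5, 28, 28))

dist, idx = nearest_train_distance(reconstructions, train_images)
print(dist.shape, idx.shape)
```

Under this measure, a smaller average distance for the deep model's reconstructions of held-out data would match the memorization behavior described above.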

Their results are consistent with the work of Belkin et al. 2018 in the case of “easy” generalization: deep models generalize better with increased capacity. But in the case of “hard” generalization, deeper models perform worse as capacity increases.

They also find that increasing the amount of training data helps deep models generalize much more than it helps shallow ones.

I think this is the first paper in a long time with both MNIST and exciting findings.