DM

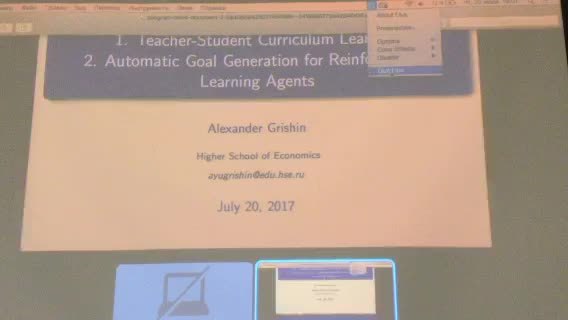

2017 July 20

or does anyone have a better idea?

GM

If there's a Periscope stream, that would be incredibly cool; getting there from the office at Vodny Stadion is a real hassle.

P

Colleagues, let's try as hard as we can to refrain from posting stickers and off-topic messages in the general chat.

I've been getting complaints that it's becoming harder and harder to find the important information in the chat.

AM

ok, deleted it :)

VS

https://arxiv.org/pdf/1706.08224v1.pdf

Sanjeev Arora and Yi Zhang

Do GANs (Generative Adversarial Nets) actually learn the target distribution? The foundational paper of (Goodfellow et al., 2014) suggested they do, if they were given "sufficiently large" deep nets, sample size, and computation time. A recent theoretical analysis in Arora et al. (to appear at ICML 2017) raised doubts whether the same holds when the discriminator has finite size. It showed that the training objective can approach its optimum value even if the generated distribution has very low support; in other words, the training objective is unable to prevent mode collapse.

The current note reports experiments suggesting that such problems are not merely theoretical. It presents empirical evidence that well-known GAN approaches do learn distributions of fairly low support, and thus presumably are not learning the target distribution. The main technical contribution is a new proposed test, based upon the famous birthday paradox, for estimating the support size of the generated distribution.
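A minimal Python sketch of the birthday-paradox idea behind that test, not the paper's actual procedure. It assumes samples can be compared for exact equality (with GAN images, duplicates have to be judged as near-duplicates, which this sketch does not attempt). The helper names `collision_prob`, `has_duplicate` and `estimate_support` are hypothetical, and so is the inversion N ≈ s²/(2 ln 2), which comes from approximating the generator as uniform over N outputs. The point is only that a duplicate showing up in a batch of size s suggests a support on the order of s².

```python
import math
import random
from typing import Callable, Hashable, Optional, Sequence


def collision_prob(n: int, s: int) -> float:
    """Exact probability that s draws from a uniform distribution over n items contain a duplicate."""
    p_distinct = 1.0
    for i in range(s):
        p_distinct *= (n - i) / n
    return 1.0 - p_distinct


def has_duplicate(batch: Sequence[Hashable]) -> bool:
    # Exact-match check only; real images would need a near-duplicate test instead.
    return len(set(batch)) < len(batch)


def estimate_support(
    sample: Callable[[int], Sequence[Hashable]],
    batch_sizes: Sequence[int],
    trials: int = 200,
) -> Optional[float]:
    """Birthday-paradox support estimate from the smallest batch size whose
    empirical duplicate rate reaches 1/2; None if no batch size does."""
    for s in sorted(batch_sizes):
        hits = sum(has_duplicate(sample(s)) for _ in range(trials))
        if hits / trials >= 0.5:
            # Assumed uniform model: P(dup) ~ 1 - exp(-s^2 / (2N)).
            # Setting this to 1/2 gives N ~ s^2 / (2 ln 2), i.e. on the order of s^2.
            return s * s / (2 * math.log(2))
    return None


if __name__ == "__main__":
    rng = random.Random(0)
    true_n = 10_000  # hypothetical "generator" with 10,000 equally likely outputs
    est = estimate_support(lambda s: [rng.randrange(true_n) for _ in range(s)], range(20, 301, 10))
    print(f"true support {true_n}, estimated about {est:.0f}")
```

The classic sanity check: with 365 equally likely birthdays, 23 people are the smallest group for which `collision_prob` exceeds 1/2.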

EZ

DeepMind never disappoints, as always

DM

Which room? Harvard? Or hasn't everyone gathered yet?

P

Heads up: today we're unexpectedly in PRINCETON :)

F

Will it be possible to download it afterwards? Or will it go up on YouTube?

DM

no idea, I think the video is kept for 2 days

DM

the quality is far too poor for YouTube: they just set up an iPad instead of a camera

2017 July 21

P

Speaking of the proximal policy optimization (PPO) mentioned earlier:

https://blog.openai.com/openai-baselines-ppo/

Learning from Demonstrations for Real World Reinforcement Learning (https://arxiv.org/abs/1704.03732) – DeepMind teaches DQN to use data from previous control of the system to pretrain and accelerate subsequent learning

AG

Evgeniy, could you please add an entry to the schedule, so that the paper/slides can be found if needed?

DM

Yesterday's recording: https://yadi.sk/i/7uN2ABOh3LGKoR

I downloaded it exactly as it was on Periscope; it will stay on Yandex.Disk for a while

АС

Maybe upload it to YouTube?

DM

I'm embarrassed by it: the quality is poor, the mic picked up a lot of background junk, etc.

DM

it's better than nothing for our people who couldn't make it, but it's far too poor to post publicly

AP

maybe create a dedicated channel for it?