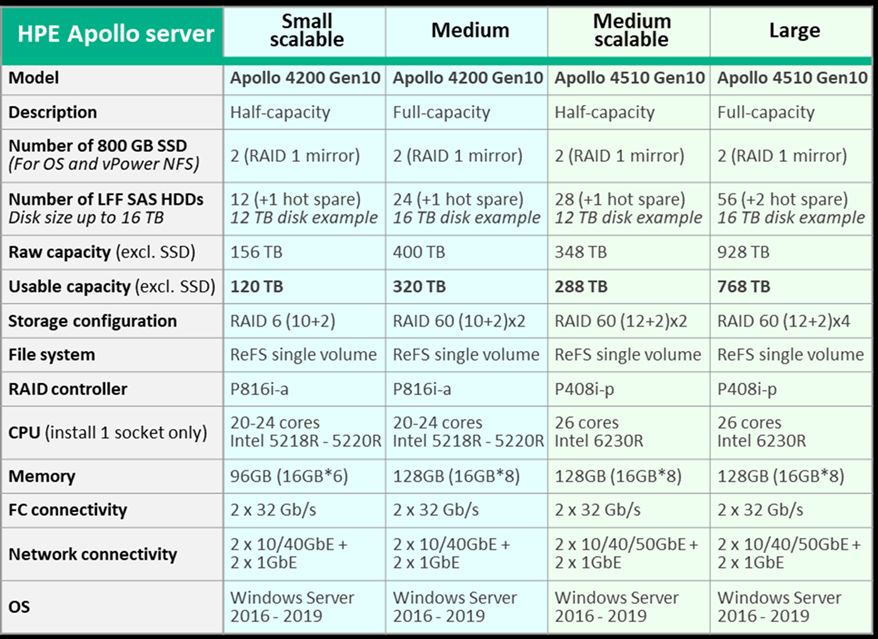

HPE's lab also tested the Apollo 4510 with version 11. The results are broadly the same, but the configuration can be even more modest: one CPU is enough, and less memory will do. One of the three Smart Array controllers can be dropped as well.

Best Practice UPDATE

- Veeam: VBR V11-Beta1
- OS: Windows Server 2019
- SmartArray: one P408i
- Data Disks: 58 * 16TB 12G SAS disks. Please note: we now recommend SAS drives instead of SATA because they offer higher reliability and performance for a small price difference.
- Boot Disks: 2 * 800GB 12G SAS SSD on LFF carriers, configured as RAID1 (mirror). The two SSDs are installed in the main drawers and connected to the same P408i controller. Please note: this differs from the previous best practice, where the two SSDs were installed on the CPU blade and connected to an additional P408a controller. The previous configuration is still valid; the new one is intended only to save the cost of the additional P408a controller.
- RAID configuration for data drives: RAID-60 as 4 Parity-Groups [4*(12+2)], plus 2 global hot-spares. Please note: after testing different options, the best RAID strip size is now 256KB, not the 128KB used with the previously tested 12GB SATA drives.
- Data File System: a single large ReFS volume formatted with 64KB pages. This has not changed.
- CPU: 1 * Intel 6230R (26 cores). Please note: this configuration is designed to maximize backup/restore performance, and Veeam VBR can push system throughput to levels that require a lot of CPU cycles, so do not downsize the CPU configuration. We tested configurations with one CPU socket only. Veeam engineering advised that V11 has improved optimization for dual-CPU servers. We have not prioritized testing dual-CPU configurations because the current one already provides enough compute performance, but we might test them in the future.
- RAM: 128GB as 8 * 16GB modules. Please note: V11 requires less RAM, so we have reduced the recommended memory. Best performance requires at least 6 DIMMs per socket, so 8 * 16GB is preferred over 4 * 32GB.
- Veeam backup Job block size storage optimization: use the normal “Local target” on Backup-Job --> Storage section --> Advanced setting --> Storage tab --> “Storage optimization” field. Please note: we tested both “Local target” and “Local target (large blocks)” and measured equivalent backup performance. With “Local target (large blocks)” the physical write speed is a little higher, but this is offset by the lower compression achievable with smaller blocks. We suggest “Local target” because incremental backups tend to require less capacity in most use cases. Smaller write chunks can potentially create more file system fragmentation over time, but this aspect was not included in our testing.
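The RAID-60 layout quoted above can be sanity-checked with a little arithmetic. The sketch below is only an illustration, not part of the HPE note: the class and field names are made up, the disk size is read as 16 TB, and the capacity figure is raw decimal terabytes before any ReFS or controller overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Raid60Layout:
    """Hypothetical helper describing a RAID-60 set plus global hot spares."""
    parity_groups: int           # number of RAID-6 groups striped together
    data_disks_per_group: int    # disks holding data in each group
    parity_disks_per_group: int  # RAID-6 uses 2 parity disks per group
    hot_spares: int              # global hot spares outside the groups
    disk_size_tb: int            # assumed drive size in decimal TB

    @property
    def total_disks(self) -> int:
        per_group = self.data_disks_per_group + self.parity_disks_per_group
        return self.parity_groups * per_group + self.hot_spares

    @property
    def usable_tb(self) -> int:
        # Only the data disks contribute to usable capacity.
        return self.parity_groups * self.data_disks_per_group * self.disk_size_tb

    def group_stripe_kb(self, strip_kb: int) -> int:
        # Data written across one parity group per stripe: strip size * data disks.
        return self.data_disks_per_group * strip_kb


# Values taken from the post: 4*(12+2) plus 2 hot spares, 256KB strips.
layout = Raid60Layout(
    parity_groups=4,
    data_disks_per_group=12,
    parity_disks_per_group=2,
    hot_spares=2,
    disk_size_tb=16,
)

print("total disks:", layout.total_disks)
print("usable capacity (TB, raw):", layout.usable_tb)
print("data per group stripe (KB):", layout.group_stripe_kb(256))
```

With these inputs the disk count comes out at 58, matching the number of data disks in the list, and the raw usable capacity is 4 * 12 * 16 = 768 TB.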